My Friends,

I want to share some of the Love that AI can bring to our beautiful world.

Artificial Intelligence is not intelligence! Artificial Intelligence is simply mathematical inference via probabilistic mathematics, and it contains no intelligence! There are far too many scaremongers around who are not intelligent enough to make assumptions.

Microsoft has an open-source application, Microsoft Olive. Olive downloads a Hugging Face (huggingface.co) model, checks it, converts it to an ONNX model, and then optimizes and accelerates it for ONNX Runtime, so you can load the model and use it locally on your machine.

OpenAI's Whisper is perhaps the best open-source automatic speech recognition (ASR) model in the world at the moment. If you want to, you can convert the model quickly and easily so it can be used in a C# ONNX Runtime application. Here is a quick video showing the basics:

Olive has some pretty good documentation!

I have done this, but it took some time, because the current version of Olive (0.5.0) and the current versions of its Python dependencies do not let the Whisper Python scripts work out of the box.

How I made this work

I am not a python expert, but I know enough to be dangerous.

Download and install Anaconda so you can work in an isolated, safe environment. Anaconda has some good documentation! Here is the command to create an environment:

conda create -n OliveEnv python=3.9

Then activate your environment:

conda activate OliveEnv

If you get stuck and need to check your environments:

conda env list
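If you want to double-check from inside Python that the right environment is active before going further, a small script can help. This is a minimal sketch, assuming the environment name OliveEnv and Python 3.9 from the commands above; `check_environment` is a hypothetical helper, and it relies on the `CONDA_DEFAULT_ENV` variable that conda sets when you activate an environment.

```python
import os
import sys

# Assumed values, taken from the conda commands above.
EXPECTED_ENV = "OliveEnv"
EXPECTED_PYTHON = (3, 9)


def check_environment(environ=os.environ, version_info=sys.version_info):
    """Return a list of problems; an empty list means the environment looks right."""
    problems = []
    # conda sets CONDA_DEFAULT_ENV to the name of the currently active environment.
    active = environ.get("CONDA_DEFAULT_ENV")
    if active != EXPECTED_ENV:
        problems.append(f"active conda env is {active!r}, expected {EXPECTED_ENV!r}")
    # Compare only major.minor, e.g. 3.9.18 counts as 3.9.
    if tuple(version_info[:2]) != EXPECTED_PYTHON:
        problems.append(
            f"Python {version_info[0]}.{version_info[1]} found, expected "
            f"{EXPECTED_PYTHON[0]}.{EXPECTED_PYTHON[1]}"
        )
    return problems


if __name__ == "__main__":
    issues = check_environment()
    for issue in issues:
        print("WARNING:", issue)
    if not issues:
        print("Environment looks good.")
```

Passing `environ` and `version_info` as parameters keeps the check easy to try out without switching environments.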

Sometimes you may need to install specific requirements. Some packages come with a 'requirements.txt' file, which you can install like so:

pip install -r requirements.txt

The changes I had to make were all in the packages.

First, I used the following commands to uninstall these packages:

pip uninstall olive-ai

pip uninstall onnxruntime

pip uninstall onnxruntime_extensions

pip uninstall pydantic

pip uninstall torch torchaudio torchvision

Now install these specific versions to make this work:

pip install olive-ai==0.2.0

pip install onnxruntime==1.15.1

pip install onnxruntime_extensions==0.8.0

pip install pydantic==1.10.14

pip install torch==2.0 torchaudio torchvision
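Because the whole setup depends on these exact versions, it is worth confirming what actually ended up installed. Here is a minimal sketch using Python's standard `importlib.metadata.version`; the `check_pins` and `release` helpers are hypothetical, and the comparison is a simplification: it only compares the numeric release part, treating "2.0" and "2.0.0" as equal and ignoring a local label such as "+cpu", roughly as pip's `==` does.

```python
import re
from importlib.metadata import PackageNotFoundError, version

# Pins from the pip install commands above (PyPI name -> pinned version).
PINS = {
    "olive-ai": "0.2.0",
    "onnxruntime": "1.15.1",
    "onnxruntime-extensions": "0.8.0",
    "pydantic": "1.10.14",
    "torch": "2.0",
}


def release(v):
    """Numeric release part of a version with trailing zeros dropped, e.g. '2.0.0+cpu' -> (2,).

    Simplification: pre-, post- and dev-release suffixes are ignored, not compared.
    """
    m = re.match(r"\d+(?:\.\d+)*", v)
    parts = [int(p) for p in m.group(0).split(".")] if m else []
    while parts and parts[-1] == 0:
        parts.pop()
    return tuple(parts)


def check_pins(pins=PINS, get_version=version):
    """Return {package: problem} for every package that does not match its pin."""
    problems = {}
    for name, pinned in pins.items():
        try:
            installed = get_version(name)
        except PackageNotFoundError:
            problems[name] = "not installed"
            continue
        if release(installed) != release(pinned):
            problems[name] = f"installed {installed}, expected {pinned}"
    return problems


if __name__ == "__main__":
    for pkg, problem in check_pins().items():
        print(f"{pkg}: {problem}")
```

Run it inside the activated OliveEnv; an empty output means every pinned package matches.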

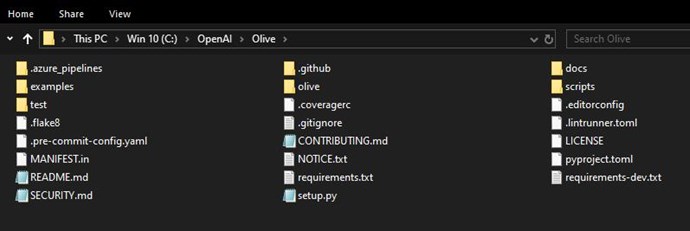

My directory structure looks like this: C:\OpenAI\Olive

The Olive directory contains the GitHub source files for Olive 0.2.0, extracted into this 'Olive' directory, so it looks like this:

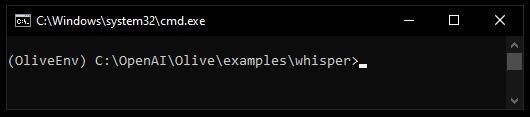
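A quick way to confirm the layout before running anything is a short Python check. This is a minimal sketch, assuming the C:\OpenAI\Olive root from the text and that the Whisper example lives in examples\whisper (the directory named later in this post); `missing_files` is a hypothetical helper.

```python
from pathlib import Path

# Assumed layout: Olive 0.2.0 sources extracted to C:\OpenAI\Olive.
OLIVE_ROOT = Path(r"C:\OpenAI\Olive")

# Files the Whisper steps rely on, relative to the Olive root.
REQUIRED = [
    Path("examples", "whisper", "prepare_whisper_configs.py"),
    Path("examples", "whisper", "whisper_template.json"),
]


def missing_files(root=OLIVE_ROOT, required=REQUIRED):
    """Return the required paths (as strings) that do not exist as files under root."""
    return [str(rel) for rel in required if not (root / rel).is_file()]


if __name__ == "__main__":
    gaps = missing_files()
    print("Layout OK" if not gaps else f"Missing: {gaps}")
```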

OK, now you're set. Move to the Whisper example directory in your terminal:

cd C:\OpenAI\Olive\examples\whisper

Now run the following commands (Windows Command Prompt syntax) to generate the Whisper model files:

set model="openai/whisper-tiny.en"

set config="whisper_cpu_int8.json"

python prepare_whisper_configs.py --model_name %model%

python -m olive.workflows.run --config %config% --setup

python -m olive.workflows.run --config %config%
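If you prefer to drive the same three steps from a Python script instead of the Command Prompt, here is a minimal sketch using `subprocess`. It only reproduces the commands shown above; the helper names `olive_commands` and `run_all` are hypothetical, and it assumes you run it inside the activated OliveEnv environment.

```python
import subprocess
import sys

# Same values as the Windows `set` commands above.
MODEL = "openai/whisper-tiny.en"
CONFIG = "whisper_cpu_int8.json"


def olive_commands(model=MODEL, config=CONFIG, python=sys.executable):
    """Build the three commands shown above as argument lists."""
    return [
        [python, "prepare_whisper_configs.py", "--model_name", model],
        [python, "-m", "olive.workflows.run", "--config", config, "--setup"],
        [python, "-m", "olive.workflows.run", "--config", config],
    ]


def run_all(cwd="."):
    """Run each step in order from the Whisper example directory; stop at the first failure."""
    for cmd in olive_commands():
        print(">", " ".join(cmd))
        # check=True raises CalledProcessError if a step exits with a non-zero code.
        subprocess.run(cmd, cwd=cwd, check=True)
```

Usage: `run_all(r"C:\OpenAI\Olive\examples\whisper")`. Using `sys.executable` means each step runs with the same Python interpreter as the script, which should be the one from your conda environment.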

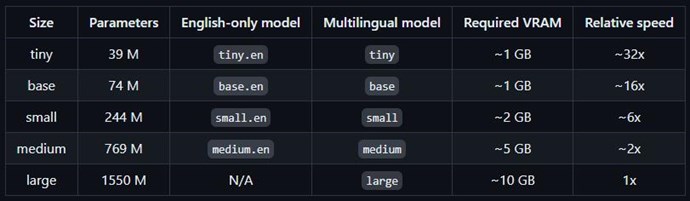

Whisper has a good range of model sizes:

Be careful not to run the big models on very basic hardware; pushing past the limits of your hardware will create nothing but problems.
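For reference, the sizes can be captured in code to help pick a model that suits your hardware. This is a minimal sketch: the parameter counts are the approximate figures published in OpenAI's Whisper README, the Hugging Face IDs follow the openai/whisper-&lt;size&gt;[.en] pattern used in the `set model=` command above, and `hf_model_id` and `sizes_up_to` are hypothetical helpers.

```python
# Approximate parameter counts (millions), as listed in OpenAI's Whisper README.
WHISPER_PARAMS_M = {
    "tiny": 39,
    "base": 74,
    "small": 244,
    "medium": 769,
    "large": 1550,
}


def hf_model_id(size, english_only=False):
    """Build the Hugging Face model ID, e.g. 'openai/whisper-tiny.en'.

    English-only ('.en') checkpoints exist for every size except 'large'.
    """
    if size not in WHISPER_PARAMS_M:
        raise ValueError(f"unknown Whisper size: {size!r}")
    if english_only and size == "large":
        raise ValueError("there is no English-only 'large' Whisper model")
    suffix = ".en" if english_only else ""
    return f"openai/whisper-{size}{suffix}"


def sizes_up_to(max_params_m):
    """Sizes whose parameter count is at most max_params_m, smallest first."""
    return [s for s, p in WHISPER_PARAMS_M.items() if p <= max_params_m]
```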

In the directory 'C:\OpenAI\Olive\examples\whisper\', you can choose a specific configuration file:

- whisper_cpu_fp32.json

- whisper_cpu_inc_int8.json

- whisper_cpu_int8.json

- whisper_gpu_fp16.json

- whisper_gpu_fp32.json

- whisper_gpu_int8.json

These files are generated from:

- whisper_template.json
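The configuration file names follow a whisper_&lt;device&gt;_&lt;precision&gt;.json pattern, so choosing one for the `set config=` step can be expressed as a simple lookup. A minimal sketch; `config_for` is a hypothetical helper, the set of names is just the list above, and what each precision actually does is defined inside the JSON files themselves.

```python
# Configuration files listed above, found in examples\whisper.
CONFIGS = {
    "whisper_cpu_fp32.json",
    "whisper_cpu_inc_int8.json",
    "whisper_cpu_int8.json",
    "whisper_gpu_fp16.json",
    "whisper_gpu_fp32.json",
    "whisper_gpu_int8.json",
}


def config_for(device, precision):
    """Map a device ('cpu' or 'gpu') and precision (e.g. 'int8') to a listed config file."""
    name = f"whisper_{device}_{precision}.json"
    if name not in CONFIGS:
        raise ValueError(f"no config {name!r}; choose one of {sorted(CONFIGS)}")
    return name
```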

Some hints

Check your 'whisper_template.json' and make sure it already has the packaging config added! I had to add the packaging config myself; however, this is easy, and Olive has pretty reasonable documentation!

See the Olive documentation for adding the required packaging information.
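If your template is missing it, the addition can be scripted. This is a minimal sketch, and the config shape is an assumption: it follows the Olive docs' pattern of an `engine.packaging_config` entry with `"type": "Zipfile"`, but check the Olive documentation for the exact fields your version accepts. `ensure_packaging` and `patch_template` are hypothetical helpers.

```python
import json
from pathlib import Path

# Assumption: Olive reads packaging settings from engine.packaging_config and
# accepts "Zipfile" as a type. Verify against the Olive docs for your version.
DEFAULT_PACKAGING = {"type": "Zipfile", "name": "OutputModels"}


def ensure_packaging(config, packaging=DEFAULT_PACKAGING):
    """Add engine.packaging_config to a config dict if missing; return True if it changed."""
    engine = config.setdefault("engine", {})
    if "packaging_config" in engine:
        return False
    # Copy so the shared default dict is never mutated through a config.
    engine["packaging_config"] = dict(packaging)
    return True


def patch_template(path="whisper_template.json"):
    """Load the template, add packaging if needed, and write it back."""
    p = Path(path)
    config = json.loads(p.read_text(encoding="utf-8"))
    if ensure_packaging(config):
        p.write_text(json.dumps(config, indent=4), encoding="utf-8")
        print(f"Added packaging_config to {p}")
    else:
        print(f"{p} already has packaging_config")
```

Run `patch_template()` from examples\whisper before `prepare_whisper_configs.py`, since the other config files are built from the template. Writing the JSON back re-indents the file, so keep a copy of the original.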

Working Model

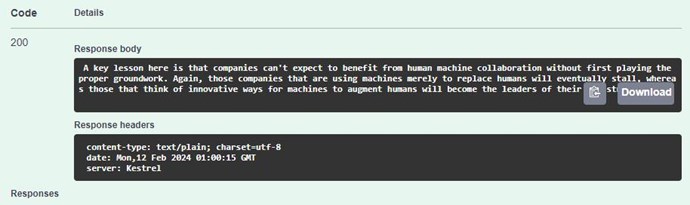

I got a model working very easily, using Swagger and a simple WebAPI:

It works very well; it's fast and accurate!

Best Wishes,

Chris